Bluetooth, PipeWire and Whatsapp calls

Whatsapp is, unfortunately, the de-facto messaging app today, especially among older people. When someone calls me while I'm at the desktop (most of the day), I need to perform the following steps:

- Remove the headphone

- Pick up the call and tell the caller to wait for a second

- Search for the wired headset (I don't like to speak directly through the phone)

- Plug the wired headset into the phone

- Start talking

It would be less time consuming if I could simply pick up the call and use the microphone and the headphone connected to the computer via Bluetooth. The steps to answer a call would be:

- Pick up the call

- Start talking

So, this post is about my experience of making these Whatsapp calls work on a Linux computer with PipeWire. Honestly, this doesn't bother me that much, but I'm using it as an excuse to learn more about these technologies. It's one of the cases where the journey is the reward, not the destination.

Yet another Audio recap

Computers are binary beings. They're not smart enough to understand anything out of the 1s and 0s realm.

Humans then need to develop creative abstractions to provide meaning to these numbers.

One byte may represent a character inside an ASCII table.

Three bytes may represent a colour in the RGB colour model.

It's no different for digital audio.

It's possible to convert the analogue signal into binary data using a process called PCM (Pulse-code modulation). The quality of the audio depends on some characteristics of these numbers, which can be:

- Sample rate: Number of samples taken from the incoming signal per second. The higher, the better the sound quality when played. A typical sample rate is 44.1 kHz (44100 samples per second) for high-quality audio, but telephony systems generally use 8 kHz, and a Skype call uses 16 kHz to save bandwidth. The sample rate is one of the biggest reasons listening to Spotify sounds better than hearing your grandmother on the telephone.

- Mono or Stereo: A channel is a stream of recorded sound. Mono audio has a single track, and stereo has two. Having an additional channel produces a more immersive experience for listeners. You can be sure that this is stereo audio when you listen to a guitar in your right ear only and the bass in your left ear with a headphone.

- Bit depth: Number of bits of each sample. The higher, the better sounding it will be. Generally, digital audio has 16 bits (values that range from -32,768 to +32,767) or 24 bits.

To grasp how computers manipulate PCM data, let's see a commented Ruby program that produces one second of white noise, famous for soothing babies (and some adults).

    SAMPLE_RATE = 44_100
    BIT_DEPTH = (-(2**15)..(2**15-1))

    # samples is a 44100 list of random values
    # The randomness is the characteristic of a white noise
    samples = SAMPLE_RATE.times.map { Random.new.rand(BIT_DEPTH) }

    # Converting the list of Ruby integers to Little-Endian binary
    binary_samples = samples.pack("s<*")

    # Write binary to a file
    File.open("/tmp/noise.raw", "wb") { |f| f.write(binary_samples) }

    # Use ffplay from ffmpeg specifying that the PCM data contains:
    # - 16 LE bit-depth
    # - 44.1 kHz of sampling rate
    # - Only one channel (mono)
    puts `ffplay -f s16le -ar 44100 -ac 1 /tmp/noise.raw`

One downside of PCM data is that it's costly to transmit and store. For example, a 3-minute song uses almost 32 megabytes (180 seconds * 44100 samples per second * 2 channels * 16 bits / 8 bits per byte = 31,752,000 bytes). That's when codecs (Coder/Decoder) enter the stage. They use mathematical algorithms to represent PCM data faithfully while producing far less data.
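The size estimate can be double-checked with a few lines of Ruby. This is only a sketch of the arithmetic; the helper name pcm_size_bytes is made up for this example, and the second call uses the 8 kHz mono telephony figures mentioned earlier for comparison.

```ruby
# Size of uncompressed PCM audio in bytes.
# pcm_size_bytes is a hypothetical helper, not part of any library.
def pcm_size_bytes(seconds:, sample_rate:, channels:, bit_depth:)
  seconds * sample_rate * channels * bit_depth / 8
end

song = pcm_size_bytes(seconds: 180, sample_rate: 44_100, channels: 2, bit_depth: 16)
call = pcm_size_bytes(seconds: 180, sample_rate: 8_000, channels: 1, bit_depth: 16)

puts "3 minutes, 44.1 kHz stereo: #{song} bytes (#{(song / 1_000_000.0).round(2)} MB)"
puts "3 minutes, 8 kHz mono:      #{call} bytes (#{(call / 1_000_000.0).round(2)} MB)"
```

The telephone-quality recording is roughly eleven times smaller, which is the same trade-off between size and quality that codecs push much further.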

Interestingly, other domains also apply this kind of compression. JPEG or PNG for images and gzip for arbitrary files, for example, significantly decrease the size of the data. Again, humans create algorithms in computers to solve a constraint or limitation.

Bluetooth

Bluetooth is a technology created in the late 1990s for devices to communicate over short distances with low power consumption. It's also a somewhat unreliable technology that has pissed people off ever since. I think everyone has faced an issue with pairing a device at least once.

Understanding the Bluetooth stack and how it transfers audio might be overwhelming with lots of abbreviations, but fear not, we'll get there.

Bluetooth Low Energy is a different beast, and it's out of scope for this post, which only discusses Classic Bluetooth, also known as BR/EDR.

Profiles, profiles, profiles

With a headphone, it's possible to listen to high-quality audio, but also to talk and listen during phone calls, and to pause the music you're listening to with its buttons. A profile represents each of these functions and is the cornerstone of allowing two devices to communicate via Bluetooth.

The profiles cover multiple use cases, from exchanging files to printing and sharing images. Each one has its own specification written by a consortium of companies named the Bluetooth SIG (Special Interest Group), similar to the W3C guiding the web standards. In this brief overview, we'll focus only on the profiles relevant for the calls: A2DP (Advanced Audio Distribution Profile) and HSP/HFP (Headset Profile/Hands-Free Profile).

A2DP is a profile used to stream high-quality audio. It supports dual-channel audio at the usual good-quality sample rates of 44.1-48 kHz, and it's mainly used for listening to audio. Within A2DP, a device can have one of two roles: A2DP Source when sending audio or A2DP Sink when receiving it.

However, A2DP has a drawback. It only allows unidirectional audio, so placing phone calls using this profile is not possible. For a device to take part in phone calls, it needs to implement either the Headset or the newer Hands-Free profile (HSP or HFP). Both have the same core features, but HFP supports some additional ones, such as last-number redial. The device connected to the telephone network, typically the phone, is the HFP Audio Gateway, and the device that acts as its remote microphone and speaker is the HFP Hands-Free unit. In our case, the Pixel 3 acts as the HFP Audio Gateway, and the computer plays the HFP Hands-Free role. But using HFP for audio is not always the best option because its quality is sub-par: it only supports mono audio and a sample rate of up to 16 kHz.

Based on these characteristics, A2DP was the profile used between the computer and the headset (Bose QC35 ii) and HFP was used between the computer and the smartphone (Pixel 3).

Codecs to the rescue

Bluetooth is unsuitable for transmitting heavy PCM data because the link can't sustain the necessary throughput. Instead, both devices agree on a codec to transmit more compact data. The device that sends audio compresses the PCM audio with the codec, and the receiving device decompresses it back into PCM to play it.
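To put a number on that claim, here is a small sketch comparing the bitrate of raw stereo PCM with the 1 Mbps gross rate of Bluetooth Basic Rate. The helper name and the constant are made up for this example, and the real usable throughput is lower than the gross figure because of protocol overhead.

```ruby
# Bitrate of raw PCM audio in bits per second.
# pcm_bitrate is a hypothetical helper used only for this comparison.
def pcm_bitrate(sample_rate:, channels:, bit_depth:)
  sample_rate * channels * bit_depth
end

# Gross Basic Rate throughput, before any protocol overhead.
BASIC_RATE_BPS = 1_000_000

raw_stereo = pcm_bitrate(sample_rate: 44_100, channels: 2, bit_depth: 16)

puts "Raw 44.1 kHz stereo PCM: #{raw_stereo} bit/s"
puts "Fits in Basic Rate?      #{raw_stereo <= BASIC_RATE_BPS}"
```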

A2DP codecs

Devices willing to implement A2DP may offer several codecs, like SBC, AAC, aptX HD or LDAC. This excellent blog post goes into depth about the particularities of each one of these. The Bose headset only supports two codecs, namely SBC and AAC, so these are our options.

The SIG created SBC (Low-Complexity Subband Codec) as a mandatory codec, so there is no risk of one device being unable to talk to another because they don't share a codec. SBC is very flexible and might perform poorly out of the box, but that wasn't my experience with PipeWire.

AAC (Advanced Audio Coding) is a popular codec found in many videos and music on the web. In addition, Apple products, such as macOS, iOS, iTunes, and Apple Music, are famous for supporting this codec. It is less configurable than SBC, but it provides a better audio experience in theory.

For the Whatsapp calls, I chose AAC because it's the default after connecting to the headphone, but both would fit because my ordinary ears can't notice a difference between them.

HSP/HFP codecs

HSP/HFP codec choices are stricter. The CVSD codec supports only audio at 8 kHz and the mSBC (wideband speech) at 16 kHz, with a single channel.

The SIG only requires devices to support the poorer CVSD, not mSBC. That's why the Bose headphone only supports CVSD, and the Linux computer needs to be the bridge between the smartphone and the headphone. If the headphone supported mSBC, I could simply pick up the call via the headphone connected to the smartphone directly.

Using CVSD is a no-no because the audio is terrible for the caller and me, especially considering I talk with older relatives. Therefore, mSBC codec is the way to go.

Bluetooth protocol stack

Besides defining the functionality of the profile, SIG also specifies how devices should make the sausages. A staggering three thousand page PDF document called core specification defines the lower level protocols of how devices must communicate with each other, from the transport to the physical layer. Drawing a parallel with TCP/IP protocol, it would be the same as if TCP, IP and Ethernet specifications were all in the same PDF document.

Controller layer - The lowest layer

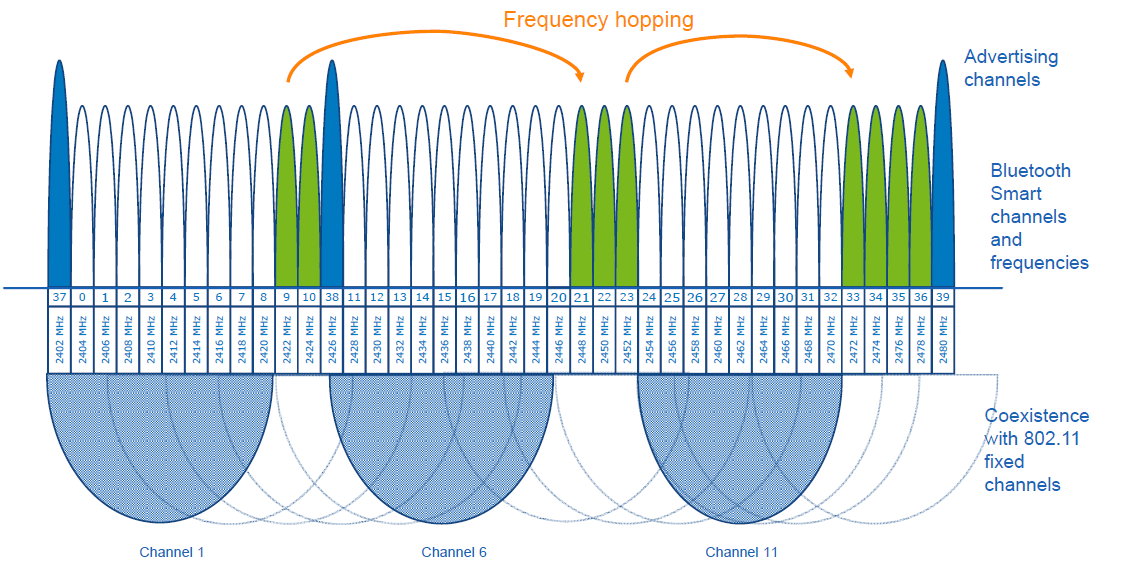

Classic or BR/EDR (Basic Rate / Enhanced Data Rate) Bluetooth operates on the 2.4 GHz band and splits it into adjacent channels to avoid signal interference, just like Wi-Fi. Did you ever need to switch manually among the eleven channels on your router to run away from "crowded spaces"? In Bluetooth, this frequency hopping happens automatically among its 79 channels: the devices switch channels pseudo-randomly every 0.625 ms (1600 times per second).

Image with coexistence of Wi-Fi and Bluetooth. This image shows the 40 channels of LE Bluetooth, but Classic Bluetooth uses 79 channels instead. The idea is the same, though. Taken from Microchip developer docs.

The clock of one of the devices, called the Central, decides which channel to switch to. All the devices following this hopping pattern are called Peripherals. The throughput can be 1 Mbps (Basic Rate), 2 Mbps or 3 Mbps (Enhanced Data Rate).

This video from Branch Education goes more in-depth about how the Bluetooth physical layer works. Actually, all the videos on this channel are superb and are worth a look.

The logical layer sits above the physical layer. It is responsible for managing the connections among devices, assigning which device is the central and which is the peripheral, and converting the raw bytes from the physical layer into frames. Ethernet has a similar structure with its physical and link layers. Three types of links can be established: ACL (Asynchronous Connection-Oriented), SCO (Synchronous Connection-Oriented) and eSCO (extended Synchronous Connection-Oriented).

SCO links reserve a certain number of slots to guarantee a constant transmission rate. Besides having the same reserved slots, the newer eSCO links support a retransmission window to offer more reliability to the connection. In practice, bidirectional audio (a.k.a. phone calls) uses SCO and eSCO.

The ACL links use the remaining slots not used by SCO/eSCO and leave the most complex part, multiplexing and ordering, to a protocol in the layer above called L2CAP. But we'll get there eventually. ACL is used basically for everything else that's not a voice call, like listening to music, moving the mouse or even doing the handshake of the SCO/eSCO link.

In Linux, the controller layer code lives inside the hardware chip named Bluetooth controller, and it's generally a closed-source blob that lives inside the linux-firmware project. So when Intel wants to fix a bug or ship new functionality for my AX200, they update a targeted blob for the controller in this repo.

Host layer - A little bit higher

The host layer implements L2CAP (Logical Link Control and Adaptation Protocol), which makes ACL more robust: it segments packets, adds error control and prevents packets from overflowing the ACL channel. It allows isochronous communication (in-order packets), necessary for a good audio experience. Other protocols, such as RFCOMM (used as a replacement for serial cables) and SDP (a fundamental protocol for discovery among devices), sit on top of L2CAP.

In Linux, the kernel implements the host layer and the userspace communicates with it via sockets.

HCI - A protocol to glue them all

Be it with L2CAP and ACL or directly sending or receiving voice packets through SCO/eSCO, the host layer needs a way to communicate with the Bluetooth controller. To allow both pieces to talk to each other, SIG created the HCI (Host Controller Interface) protocol.

One of the ways that the Linux kernel implements the HCI layer is through the Linux USB API. The kernel encapsulates the outgoing ACL/SCO packets into HCI packets and then into USB packets. The controller receives these USB packets and reassembles them into ACL/SCO packets. When the controller is the sender, the flow is reversed. Even when using a Bluetooth keyboard or mouse in Linux, you're somehow using USB to make it work. How wild is that? (This might not be the case when the controller uses UART or RS232 for the HCI transport.)

In Linux, the translation of ACL/SCO packets and USB packets happens in the btusb module.

The most relevant HCI packets for these calls are:

- Commands and Events: The host can modify the controller state or receive events. Similar to Netlink sockets changing the network configuration in Linux.

- Data packets: Send and receive ACL or SCO data
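As an illustration of what a command looks like on the wire, here is a minimal Ruby sketch that builds an HCI command packet. It assumes the standard layout: a 16-bit little-endian opcode made of a 6-bit OGF and a 10-bit OCF, followed by a one-byte parameter length and the parameters. The helper names are made up for this example; HCI_Read_Buffer_Size (OGF 0x04, OCF 0x0005) is used because it takes no parameters.

```ruby
# Combine the OpCode Group Field (upper 6 bits) and the
# OpCode Command Field (lower 10 bits) into the 16-bit opcode.
def hci_opcode(ogf, ocf)
  (ogf << 10) | ocf
end

# HCI command packet: opcode (2 bytes, little-endian),
# parameter length (1 byte), then the parameters themselves.
# hci_command is a hypothetical helper, not a BlueZ API.
def hci_command(ogf, ocf, params = "".b)
  [hci_opcode(ogf, ocf), params.bytesize].pack("vC") + params
end

# HCI_Read_Buffer_Size has no parameters.
packet = hci_command(0x04, 0x0005)
puts format("opcode: 0x%04x", hci_opcode(0x04, 0x0005))
puts "bytes:  #{packet.unpack1('H*')}"
```

When the transport is UART, an extra packet-type byte precedes these bytes; the sketch leaves that out.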

Wrapping it up

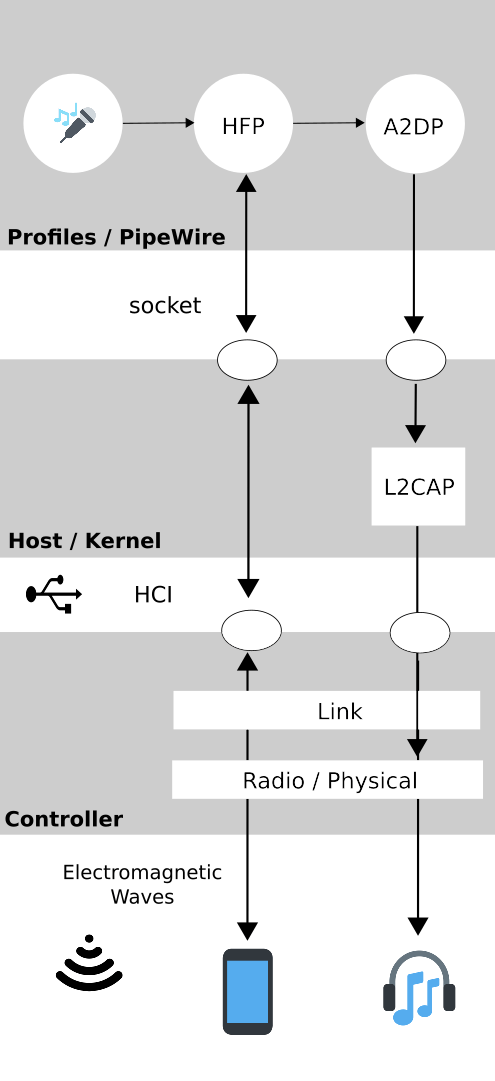

After briefly touching the Bluetooth stack, the following diagram exposes all the devices and pieces connected.

Figure 2.1 of Bluetooth Core Specification Version 5.3 | Vol 1, Part A. The top diagram is the userspace PipeWire graph that we'll see in the next section.PipeWire

PipeWire is an application responsible for routing multimedia data between applications and devices. Before PipeWire, the two main alternatives for audio on Linux were JACK for professional audio and PulseAudio for consumer audio. PipeWire's goal is to cover both use cases and become the de-facto Linux sound server.

Like JACK, PipeWire builds a graph of connected devices and applications. It schedules, resamples and routes the data flowing through all the interconnected nodes in the graph. These nodes can have configurable and dynamic buffers holding audio data. Bigger buffers add latency to the stream as it passes through the connected nodes but consume less processing power.
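The latency side of that trade-off is simple arithmetic: a buffer of N frames covers N divided by the sample rate seconds of audio. The sketch below uses a made-up helper name and illustrative buffer sizes, not PipeWire's actual defaults.

```ruby
# Time covered by one buffer of audio, in milliseconds.
# buffer_latency_ms is a hypothetical helper for this example.
def buffer_latency_ms(frames, sample_rate)
  frames * 1000.0 / sample_rate
end

# Illustrative buffer sizes, not PipeWire defaults.
[64, 256, 1024, 4096].each do |frames|
  latency = buffer_latency_ms(frames, 48_000)
  puts format("%5d frames at 48 kHz: %6.2f ms per buffer", frames, latency)
end
```

A larger buffer means the graph wakes up less often to process audio, which is where the lower processing cost comes from.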

As a practical example, here are the nodes involved in the Whatsapp call:

- the source node receives PCM data from the microphone when I speak. The sink node managing the HFP connection wakes up, gets this PCM data, and encodes the audio with the mSBC codec. Then, it writes this data to a socket, and the caller can hear me.

- another source node receives mSBC data from the socket and decodes it back to PCM data. This audio contains the caller's voice.

- the sink node from the A2DP connection linked to the headphone encodes the audio with AAC codec and writes it to the socket

To juggle all of these pieces, PipeWire ships with some programs.

- The daemon (pipewire-core) is responsible for holding the properties of the registered nodes and other objects. In addition, it exposes events and the current state of the processing graph. For example, the CLI pw-mon connects to the daemon through a socket exposed by PipeWire and monitors the creation and updates of all nodes and other entities.

- The session manager (pipewire-media-session) performs device discovery, policy logic for sandboxed applications, and node configuration. It doesn't hold any state of the objects, which is the responsibility of the daemon. The PipeWire session manager is a Proof of Concept, and WirePlumber will replace it eventually. This module even lived in a directory called examples in PipeWire source code, but now it's a separate repo included as a git submodule in the main codebase.

- The pipewire-pulse server translates calls from clients that use the PulseAudio API to PipeWire's own API. Because of this, apps like Spotify, Chrome, Zoom and Firefox don't need to be rewritten to use the new PipeWire API.

Bluetooth on PipeWire

The BlueZ project implements Bluetooth on the Linux desktop.

BlueZ comprises a kernel subsystem that implements the Host Layer - L2CAP logic, socket infrastructure and assembling/disassembling HCI packets.

Its userspace counterpart is in a daemon called bluetoothd, which exposes its interface to other apps using D-Bus APIs.

Some command-line tools like bluetoothctl and btmon are also available to introspect and configure Bluetooth in Linux.

PipeWire is one of the consumers of these D-Bus APIs provided by bluetoothd.

To implement the A2DP profile, the session manager needs to send some D-Bus method calls and listen to some signals from the Media API section.

One of these interactions are:

- It needs to register itself as a media endpoint to receive updates on the connections. It calls the

RegisterApplicationmethod onorg.bluez.Media1interface. - After the computer pairs the device, PipeWire calls the method

SetConfigurationonorg.bluez.MediaEndpoint1, to set the agreed codec between the host and the device. - When PipeWire requests that the node start playing some audio, it will send the method

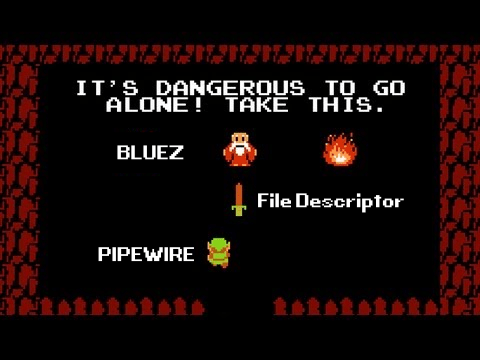

Acquireto theMediaTransport, which returns a file descriptor.

With this file descriptor, PipeWire can write the audio directly to the socket interfacing with the kernel (the encoding happens on the PipeWire side).

Under the hood, BlueZ opens a socket with socket(PF_BLUETOOTH, SOCK_SEQPACKET, BTPROTO_L2CAP), but applications using the API don't need to care about this complexity.

Wrapping it up

According to the PipeWire docs, a node is an element that consumes and/or produces buffers containing data. A port is attached to a node and has a direction (input for sink devices or output for source devices). Finally, a link connects two ports together.

    +------------+                    +------------+
    |            |                    |            |
    |         +--------+  Link  +--------+         |
    |   Node  |  Port  |--------|  Port  |  Node   |
    |         +--------+        +--------+         |
    |            |                    |            |
    +------------+                    +------------+

So, when playing music through a speaker, PipeWire creates a Spotify node with two ports because the sound is stereo and two links connected to the two ports of the speaker node.

When pipewire-pulse streams data from the Spotify process, PipeWire manages the data flow through these components until audio is played on the speaker.
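To make the relationship between nodes, ports and links concrete, here is a toy Ruby model of the Spotify example. The classes and port names are invented for illustration and have nothing to do with PipeWire's real API; the only rule encoded is that a link goes from an output port to an input port.

```ruby
# Toy model of the graph concepts; illustrative only, not PipeWire's API.
Port = Struct.new(:node, :name, :direction)
Link = Struct.new(:output, :input)

class Node
  attr_reader :name, :ports

  def initialize(name)
    @name = name
    @ports = []
  end

  # Attach a new port with the given direction (:input or :output).
  def add_port(port_name, direction)
    port = Port.new(self, port_name, direction)
    @ports << port
    port
  end
end

# A link always connects an output port to an input port.
def connect(output, input)
  unless output.direction == :output && input.direction == :input
    raise ArgumentError, "a link goes from an output port to an input port"
  end
  Link.new(output, input)
end

spotify = Node.new("Spotify")
speaker = Node.new("Speaker")

# Stereo sound: one port and one link per channel (hypothetical port names).
links = %w[left right].map do |channel|
  connect(spotify.add_port("out_#{channel}", :output),
          speaker.add_port("in_#{channel}", :input))
end

links.each do |l|
  puts "#{l.output.node.name}:#{l.output.name} -> #{l.input.node.name}:#{l.input.name}"
end
```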

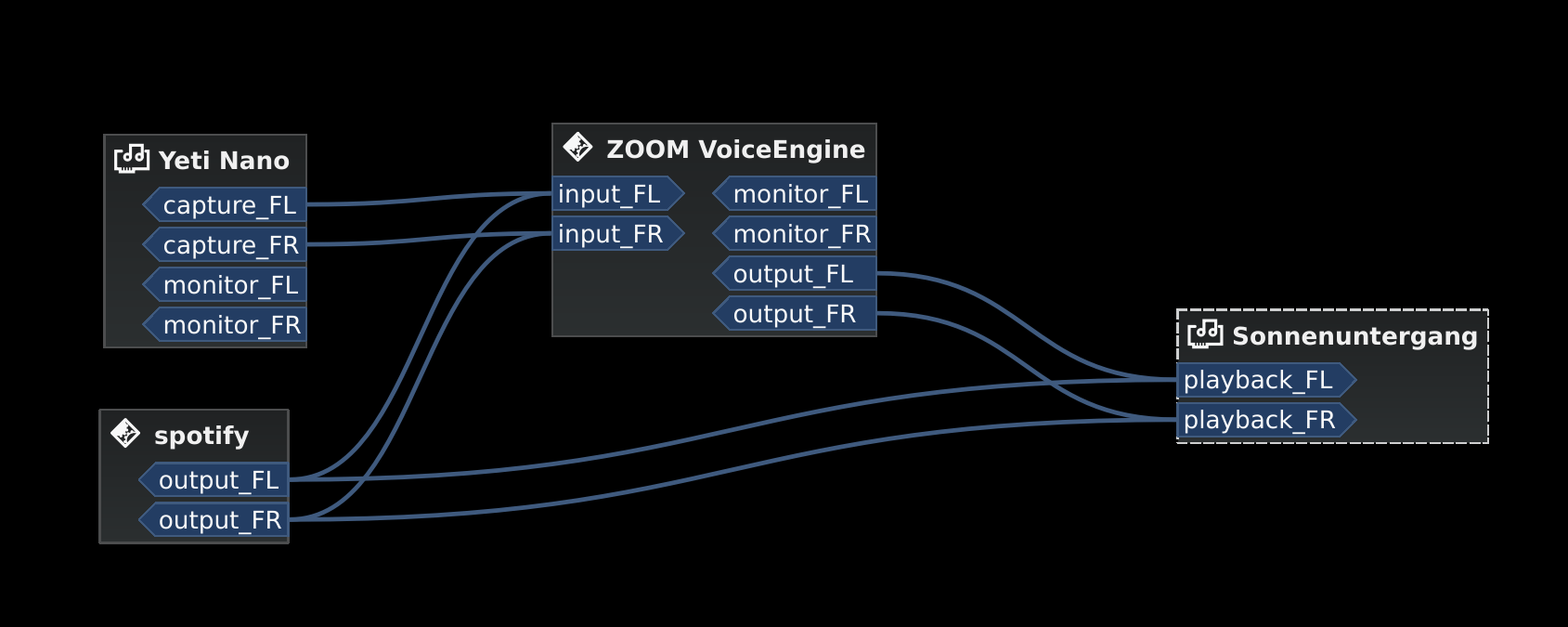

To sum it up, here is the PipeWire graph of the Whatsapp call setup:

pw-dot command-line tool. Even though node 68 has two ports, the sound won't have a stereo-like quality because HFP only supports mono audio.Whatsapp Calls

Now that I've explained the basic concepts of Bluetooth and PipeWire, it's time to tell the story of how I tried to make the setup work.

Improving the feedback loop

It's impossible to call yourself on Whatsapp, and I didn't want to nag other people into being my guinea pigs. To test that things were working, I opened two sessions of a Zoom channel, one connected via Pixel 3 and another with the computer. But, these tests proved to be a nuisance because when I needed to restart PipeWire with a different configuration, the app lost its connection, and the audio didn't work anymore. I needed to leave the meeting and join again.

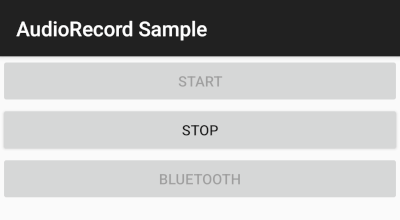

Looking into how to speed up the feedback look, I found the project android-audiorecord-sample. This project opens an HFP call and provides some on/off knobs to save the incoming voice from the caller into a file, but the idea was to stream back the audio receiving back to the sender, so I can hear in real-time how my voice looks like. I had a working app after changing the Java code and fixing some permission logic and SDK version issue. After some refactorings, I managed to stream the received audio from the HFP link back to the computer.

BLUETOOTH and the START button and hear me talking on the headphone through an HFP connection.Configuration

In theory, everything would work out of the box.

But with the default configuration, that wasn't happening, and the smartphone wasn't even connecting.

To make it work, I had to disable HSP and enable mSBC explicitly. This configuration lives in /usr/share/pipewire/media-session.d/bluez-monitor.conf.

    properties = {
        # By default, the CVSD codec was being used when I tested it
        bluez5.enable-msbc = true

        # Excluding all other roles, especially hsp_hf, otherwise it defaults to an HSP connection
        # HSP doesn't support mSBC, which is bad
        bluez5.headset-roles = [ hfp_hf ]
    }

    rules = [
        {
            matches = [
                {
                    # Matching all bluetooth devices
                    device.name = "~bluez_card.*"
                }
            ]
            actions = {
                update-props = {
                    # PipeWire automatically connects to the Pixel 3 and the Bose headphone
                    bluez5.auto-connect = [ hfp_ag a2dp_sink ]
                }
            }
        }
    ]

In the future, WirePlumber will replace pipewire-media-session, and these configurations will be done via a Lua script.

The migration will be smooth when that happens because the code that handles these keys and values is inside a SPA (Simple Plugin API) plugin (living in libspa-bluez5.so) used by both session managers.

Additionally, in the middle of 2021, after I started the experiments, PipeWire added the concept of a "quirks" database, which enables and disables mSBC support automatically based on a list of devices or kernel versions.

Maybe the bluez5.enable-msbc option is outdated, but it doesn't hurt to force it just to be sure.

After PipeWire used mSBC and even auto-connected, I would be happy to start using it. However, I found more issues.

I can't hear what other people are saying

The first issue I encountered was that the volume from the caller was ultralow, almost inaudible.

After a quick investigation, I noticed that the file $HOME/.config/pipewire/media-session.d/restore-stream was the culprit.

This file stores the volume and mute state of each node, so the user doesn't need to set them again when a node reappears.

The key representing the source node had a low volume there for whatever reason.

Changing the volume slider in PulseAudio Volume Control didn't help either.

Changing the volume to 1.000000 in the file directly fixed the issue.

I cannot reproduce this issue any longer after updating the file and reloading the session manager.

I barely hear what other people are saying

Now at least I could hear the caller. But the volume was lower than usual, so I needed to raise the headphone volume after accepting a call and set it back to the old value when I finished.

An option would be to adjust all applications to play at a low volume, but that's not ideal. To really fix it, I needed to find out where the volume was being decreased: Is it PipeWire or Android that's proactively changing the volume?

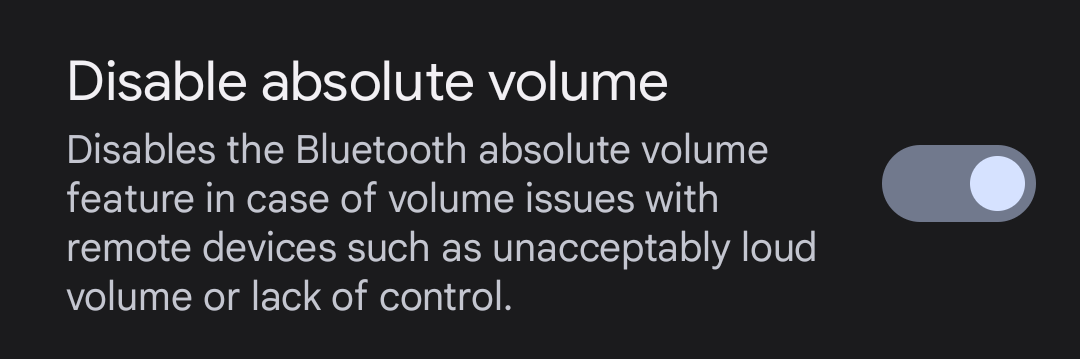

After googling about it, I found a Reddit thread that mentions that users should toggle off the Absolute Bluetooth Volume option.

With this feature, Android is the owner of the volume control on the other end and assumes that the sink will adjust it accordingly. Spoiler alert: PipeWire didn't modify it.

After disabling it, the volume is compatible with the computer's volume.

I'm almost sure that this is not the only factor that impacts the call volume. Some days the volume is good even with this option disabled or too low with the option enabled.

Computer is playing Phone audio

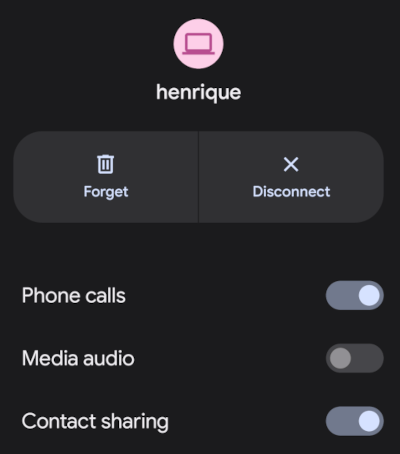

Not related to the calls themselves, but one annoying detail is that whenever I tried to play some video on Netflix or Youtube on the Pixel 3, the audio was playing on the default computer sink, the speaker. This was happening because, besides acting as an HFP Audio Gateway, the smartphone was also an A2DP Source.

That feature of playing audio from Pixel 3 through the computer might be interesting for the future, but not right now, so I simply disabled this option.

The call is chopping a lot occasionally

Sometimes, the call was cut, and I couldn't understand what the other person said. Connecting the headphone with a cable made the audio work again. Looking at PipeWire logs, there were lots of errors when writing on the L2CAP/A2DP socket (the Bluetooth link with the headphone).

This issue is annoying because I couldn't reproduce it deterministically. And, as usual, these are the worst issues to troubleshoot. Some days I could reproduce it faithfully, but I couldn't most days. Because this investigation was trickier, I'm separating it into different subsections.

Why is the socket write bailing out?

In the logs, I was seeing this line coming from the a2dp-sink file: a2dp-sink 0x55ea222c72c8: Resource temporarily unavailable. This message is the human-readable form of EAGAIN, error code 11.

When the send socket call fails with an EAGAIN error, it means that this non-blocking operation can't complete right now, and the userspace counterpart should try again later.

This behaviour also manifests itself on TCP/IP calls.

The kernel is rejecting the write in this part of code and the simplified version is shown below:

    struct sk_buff *sock_alloc_send_pskb(struct sock *sk, int *errcode)
    {
        for (;;) {
            /* There is still room in the send buffer: go allocate */
            if ((sk->sk_wmem_alloc - 1) < sk->sk_sndbuf)
                break;

            /* Send buffer is full: flag it and refuse the write */
            sk_set_bit(SOCKWQ_ASYNC_NOSPACE, sk);
            set_bit(SOCK_NOSPACE, &sk->sk_socket->flags);
            err = -EAGAIN;
            goto failure;
        }

        skb = alloc_skb_with_frags(...);
        return skb;

    failure:
        *errcode = err;
        return NULL;
    }

A new sk_buff is allocated and connected to the socket only if sk_wmem_alloc field is smaller than sk_sndbuf field.

When a new buffer arrives in the kernel, sk_wmem_alloc grows by its size.

By default, the socket takes the sk_sndbuf value from /proc/sys/net/core/wmem_default (212992 by default on my machine).

But PipeWire sets this socket property to a lower value with a setsockopt call passing the SO_SNDBUF parameter.

PipeWire multiplies the write MTU of the device by two. As an example, the Bose headphone has an MTU of 875.
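The same behaviour can be reproduced without any Bluetooth hardware. The sketch below is an analogy, not PipeWire's code: it uses a local UNIX socket pair in place of the L2CAP socket, shrinks the send buffer to two times the 875-byte MTU, and writes without anyone reading on the other end. The kernel may round the requested buffer size up, so the number of accepted bytes varies between systems.

```ruby
require "socket"

# A connected pair of local stream sockets stands in for the Bluetooth socket.
writer, reader = UNIXSocket.pair

# Ask for a small send buffer, in the spirit of PipeWire's 2 * MTU.
# The kernel is free to round this value up.
mtu = 875
writer.setsockopt(Socket::SOL_SOCKET, Socket::SO_SNDBUF, 2 * mtu)

chunk = "\x00".b * mtu
accepted = 0
result = nil

# Nobody reads from `reader`, so the buffers eventually fill up.
loop do
  # With exception: false, EAGAIN is reported as :wait_writable.
  result = writer.write_nonblock(chunk, exception: false)
  break if result == :wait_writable
  accepted += result
end

puts "Kernel accepted #{accepted} bytes, then answered #{result.inspect}"
puts "Errno message: #{Errno::EAGAIN.new.message}"
```

Once the reader drains its side, the writer can continue. In the Bluetooth case the "reader" is the controller, which is why the investigation has to go one layer down.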

Naive me thought: "It's such a low value. I will increase the size of the buffer. That will solve it."

So, instead of multiplying by two, I changed the PipeWire code to multiply by 5 to check what happened.

However, it only made matters worse because when the EAGAIN error happened, the audio didn't catch up, and I could only hear silence after the first hiccup. Then some audio after some seconds and then silence again.

I couldn't find the reasoning for setting a low buffer on the PipeWire codebase. Still, I could trace back why the two-factor multiplication is there from a Pulseaudio commit and the forum discussion.

Pulseaudio/PipeWire decreases the buffer size to avoid lags after "temporary connection drops". Logically, the error is one layer below, and I needed to check why the buffers were not emptied on time.

Gimme data - How eBPF became my best friend

I hit a wall. Looking at the BlueZ kernel code, I didn't know why the socket was full and not accepting new buffers. And why is this happening only occasionally?! Looking at logs wasn't helping me much, and I needed a new approach.

That's when I stumbled upon eBPF (Extended Berkeley Packet Filter). eBPF is a recent technology that allows extending the kernel without recompilation or adding new modules. It supports many features, one of which is to plug some hooks into functions and log their parameters. This allowed me to get my feet wet starting with stackcount that checks the number of invocations of a function and a rudimentary stack trace. Then I started creating my own scripts to log some interesting functions.

Looking at logs from different functions simultaneously proved to be hard to follow. Besides, it was difficult to extract historical data to compare when everything was fine and days when nothing worked. One thing led to another, and I created bluetooth_exporter as a tool to help me solve this issue.

This tool exports Prometheus data from what's happening on the Bluetooth layer in the kernel. The repo also includes a docker-compose with Prometheus and Grafana setup for easy integration. Besides other metrics, I could see:

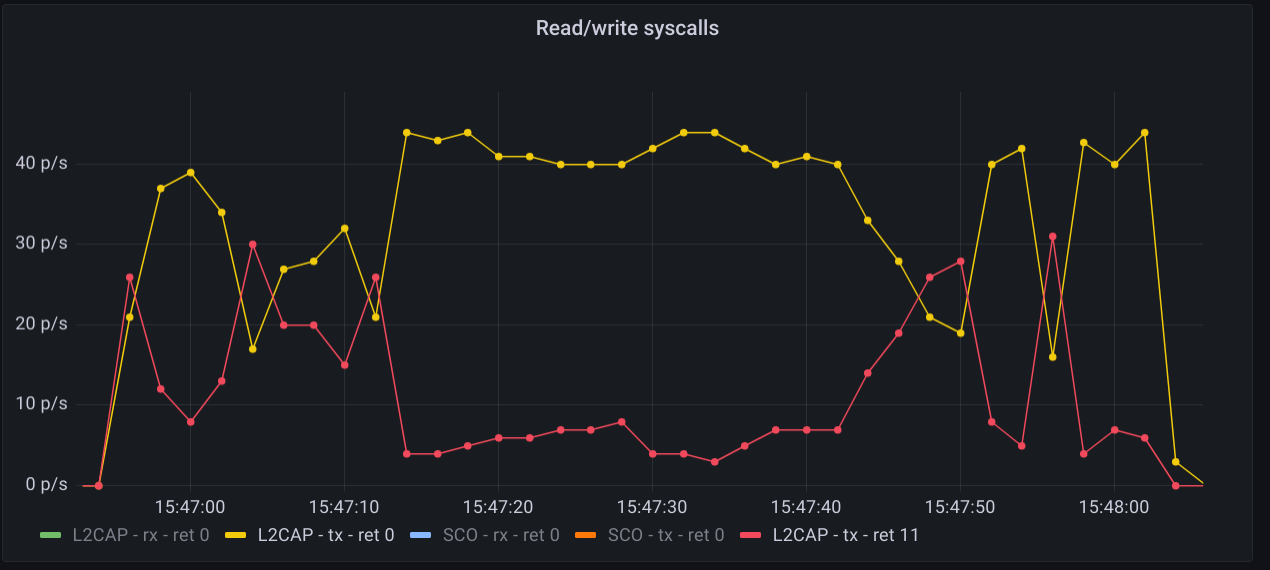

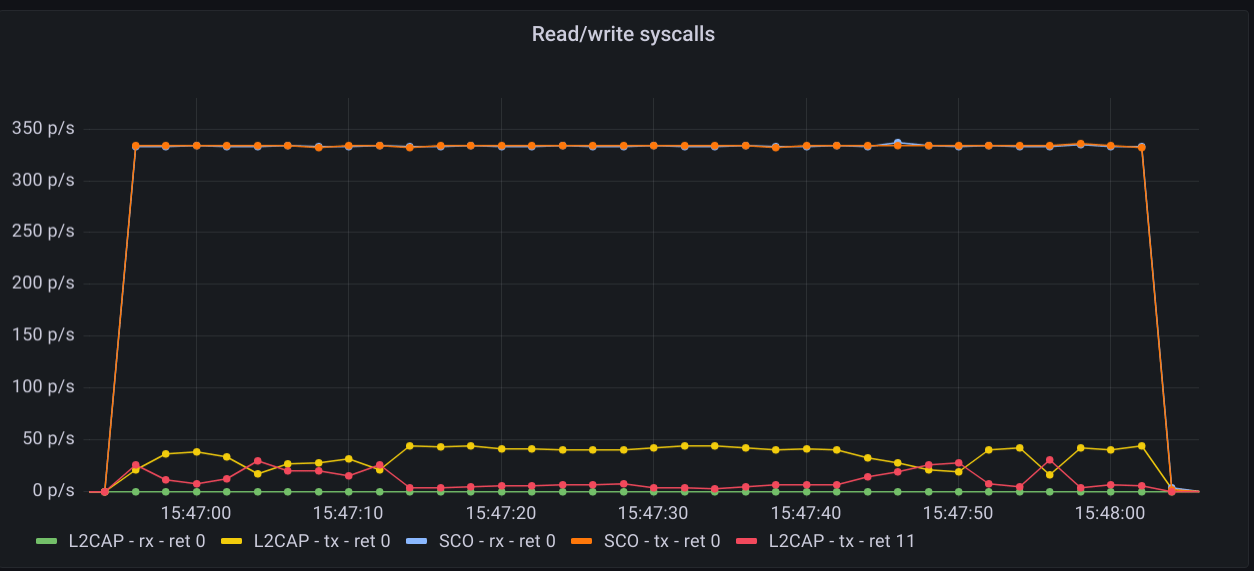

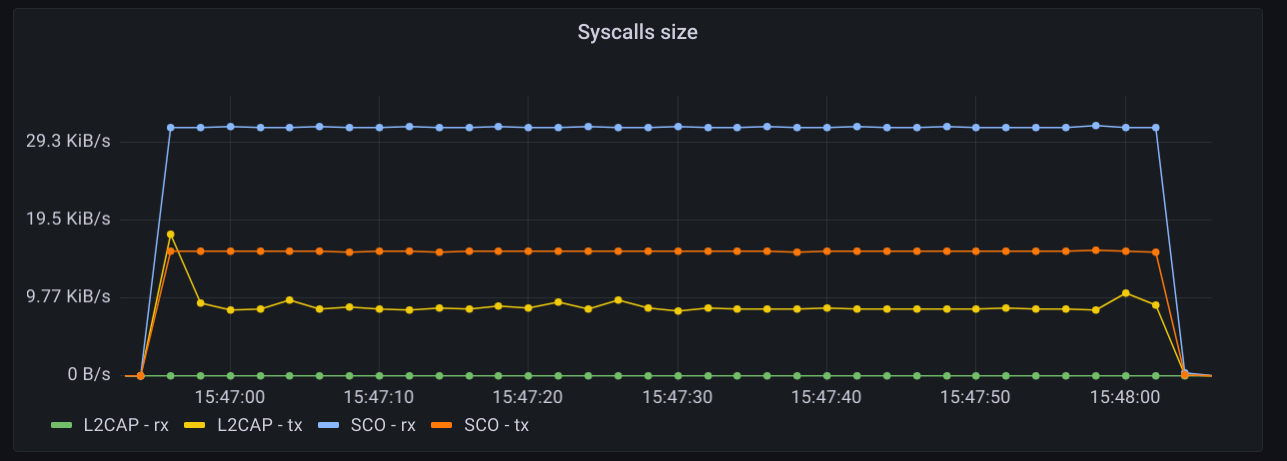

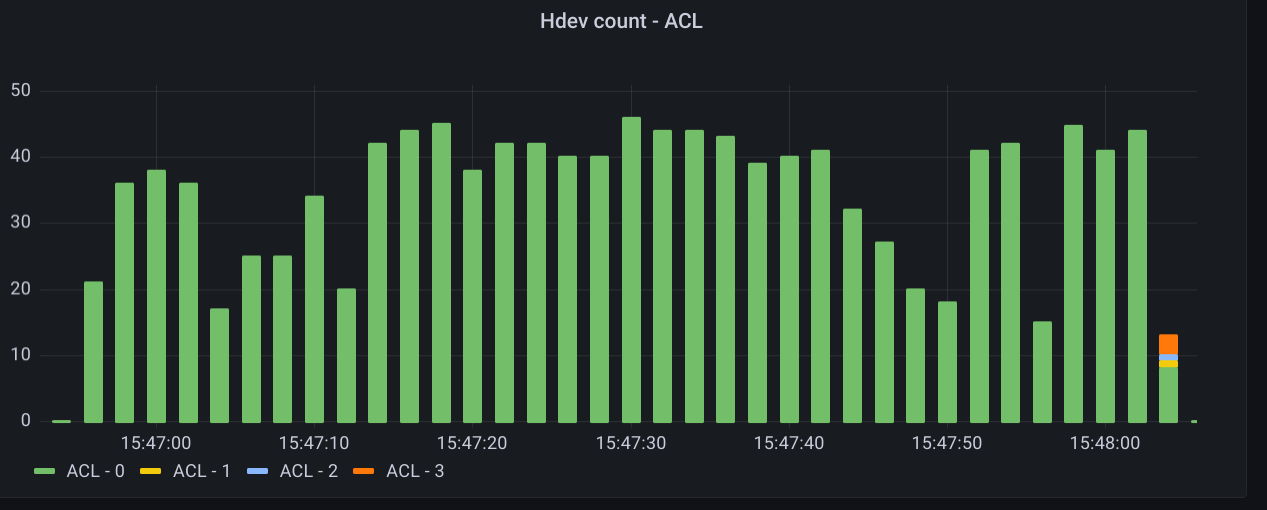

- The number of write syscalls from Bluetooth sockets and their return codes

- The time an HCI packet takes to pass through the USB layer

- The interval that an ACL packet takes to be acknowledged

The repo README provides a more in-depth explanation of these features and where they're hooked on the kernel. I'm pretty satisfied because it's generic enough to be used in other contexts, like correlating the codec change with the Bluetooth throughput. Keep in mind that nothing in this repo is PipeWire specific.

Why the EAGAINs?

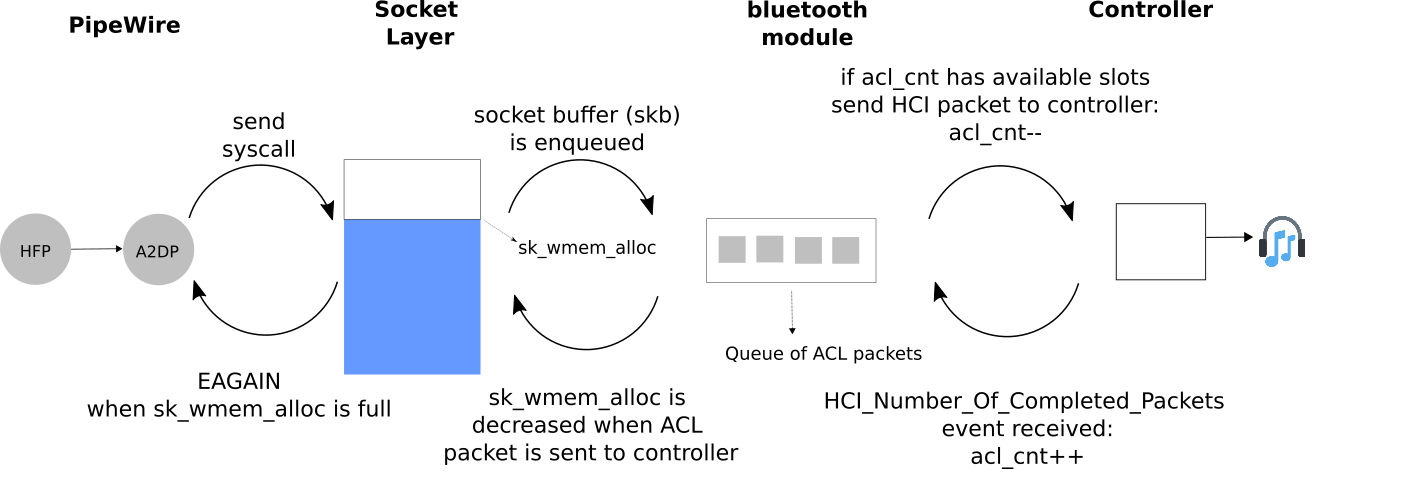

Before jumping to conclusions, we need to understand some of the layers an ACL packet needs to pass before eventually reaching the controller.

- When a Bluetooth controller is being initialized, the kernel sends an HCI_Read_Buffer_Size HCI command to the controller. The returned value signals the total number of packets that the controller can process concurrently. The kernel stores the ACL value in a field called acl_cnt. In the case of my controller (AX200), the value is 4 slots for ACL and 6 slots for SCO.

- Whenever an ACL packet is enqueued, the kernel decreases acl_cnt by 1. This represents that the controller is "busy" with that outgoing packet. In addition, when the ACL packet is scheduled, the sk_buff is destroyed and, consequently, the higher-level sk_wmem_alloc decreases, making room for a new buffer from userspace.

- If a new packet arrives and the value of acl_cnt is 0, no new packet is sent to the controller.

- Whenever an ACL packet is processed by the sink device (Bose headset), the controller sends an HCI_Number_Of_Completed_Packets event. Then, acl_cnt is incremented, and the kernel can now send new ACL packets to the controller.

The acl_cnt has a similar purpose as sk_wmem_alloc field but in a lower layer.
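The steps above amount to a credit-based flow control, which can be sketched as a toy Ruby class. This is a simplified model written for this post, not the kernel's code: the class and method names are invented, and only the acl_cnt bookkeeping is represented.

```ruby
# Toy model of the controller's ACL credits; not the kernel's actual code.
class AclCredits
  attr_reader :acl_cnt, :pending

  def initialize(slots)
    @acl_cnt = slots # value reported by HCI_Read_Buffer_Size
    @pending = []    # packets waiting in the host for a free slot
  end

  # The host has a packet to transmit.
  def enqueue(packet)
    @pending << packet
    flush
  end

  # The controller sent HCI_Number_Of_Completed_Packets.
  def completed(count)
    @acl_cnt += count
    flush
  end

  private

  # Hand packets to the controller while credits remain.
  def flush
    sent = []
    while @acl_cnt.positive? && !@pending.empty?
      sent << @pending.shift
      @acl_cnt -= 1
    end
    sent
  end
end

acl = AclCredits.new(4) # 4 ACL slots, as reported by the AX200
6.times { |i| acl.enqueue("packet-#{i}") }
puts "after 6 packets:  acl_cnt=#{acl.acl_cnt} waiting=#{acl.pending.size}"

acl.completed(1)
puts "after 1 complete: acl_cnt=#{acl.acl_cnt} waiting=#{acl.pending.size}"
```

If completions arrive slower than new packets, acl_cnt stays at 0 and the waiting queue grows, which is the situation that eventually surfaces as EAGAIN in userspace.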

Looking at the Grafana panels from bluetooth_exporter helped me identify that acl_cnt was always 0 when the audio was chopping, and the time to receive an HCI_Number_Of_Completed_Packets event was longer than usual.

I found our bottleneck!

The controller could not acknowledge the ACL packets as quickly as packets were arriving from userspace.

Possible explanations

Knowing why this happens is tricky because the controller is a black box that offers almost no introspectability. My hunch is that the 2.4 GHz band is pretty noisy at some moments, and the controller needs to spend more time than usual on retransmissions and on waiting for acknowledgements of the packets in flight. It would explain why I can only reproduce this on some days. Another option is that it's simply a specific bug in the controller or that the headphone takes too long to acknowledge the ACL packets.

Grafana panels from bluetooth_exporter: write return code EAGAIN/11; SCO-rx is 48, and SCO-tx is 96; acl_cnt is always 0 during the time EAGAINs are returned.

Possible solutions

A possible workaround would be to try to have a less reliable call. I found an interesting quote from the specification searching how to achieve that.

eSCO traffic should be given priority over ACL traffic in the retransmission window. – Bluetooth Core Specification Version 5.3 | Vol 2, Part B. Section 8.6.3

eSCO achieves a more reliable connection than SCO by reserving additional slots for retransmission if needed.

This field is called Retransmission_Effort.

Also, there is a configured value called Maximum Latency, which is the time in milliseconds the controller waits before giving up on a packet, retransmissions included.

Maybe I could tweak these two settings to use fewer slots and leave more ACL slots for the headphone communication.

Sniffing the HCI commands and events involved in the eSCO handshake, I stumbled upon a promising path. The HCI_Enhanced_Setup_Synchronous_Connection command configures the current parameters of an existing eSCO connection.

This command can change 23 parameters related to the current transport.

I copied the same parameters and modified only the Max_Latency and Retransmission_Effort for the call.

For that, I wrote a Ruby script that sends a crafted hcitool cmd with the same binary data.
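The idea can be sketched roughly as follows. This is not the original script: the helper names are hypothetical, the payload is a zero-filled placeholder rather than real captured data, and the offsets assume the parameter layout from the Core Specification, where the 59-octet parameter block ends with Max_Latency (2 octets, little-endian), Packet_Type (2 octets) and Retransmission_Effort (1 octet).

```ruby
# Sketch: patch two fields of a captured Enhanced Setup Synchronous Connection
# payload and build the matching hcitool command line (nothing is executed).
PARAM_LEN = 59             # assumed total size of the command parameters
MAX_LATENCY_OFFSET = 54    # assumed offset: 2 octets, little-endian
RETRANS_EFFORT_OFFSET = 58 # assumed offset: last octet

# Returns a copy of the payload with the two fields overwritten.
def patch_esco_params(payload, max_latency:, retrans_effort:)
  raise ArgumentError, "expected #{PARAM_LEN} bytes" unless payload.size == PARAM_LEN

  patched = payload.dup
  patched[MAX_LATENCY_OFFSET] = max_latency & 0xff
  patched[MAX_LATENCY_OFFSET + 1] = (max_latency >> 8) & 0xff
  patched[RETRANS_EFFORT_OFFSET] = retrans_effort & 0xff
  patched
end

# Builds the argument list for `hcitool cmd <ogf> <ocf> <parameters...>`.
def hcitool_args(payload)
  ["hcitool", "cmd", "0x01", "0x003d", *payload.map { |b| format("0x%02x", b) }]
end

captured = Array.new(PARAM_LEN, 0x00) # placeholder for the sniffed bytes
patched = patch_esco_params(captured, max_latency: 0x0008, retrans_effort: 0x00)
puts hcitool_args(patched).join(" ")
```

Printing the command instead of running it makes it easy to compare the bytes against the sniffed handshake before sending anything to the controller.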

Unfortunately, that didn't work, and the controller replied with the following HCI event:

    > HCI Event: Command Status (0x0f) plen 4    #101928 [hci0] 382.386610
          Enhanced Setup Synchronous Connection (0x01|0x003d) ncmd 1
            Status: Invalid HCI Command Parameters (0x12)

That's a bit cryptic because I had no idea what went wrong. All I knew was that the controller considered at least one of the 23 parameters invalid.
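For reference, the event itself is tiny. The sketch below decodes the four parameter octets of a Command Status event, assuming the layout from the Core Specification (status, number of allowed command packets, then a little-endian opcode whose upper 6 bits are the OGF and lower 10 bits the OCF). The function name and the two-entry status table are illustrative; the bytes are reconstructed from the log above, not copied from a capture.

```ruby
# Sketch: decode the parameters of an HCI Command Status event (0x0f).
STATUS_NAMES = {
  0x00 => "Success",
  0x12 => "Invalid HCI Command Parameters"
}.freeze

def decode_command_status(params)
  # C = unsigned 8-bit, v = unsigned 16-bit little-endian
  status, ncmd, opcode = params.pack("C*").unpack("CCv")
  {
    status: STATUS_NAMES.fetch(status, format("Unknown (0x%02x)", status)),
    ncmd: ncmd,
    ogf: opcode >> 10,    # opcode group field
    ocf: opcode & 0x03ff  # opcode command field
  }
end

# Status 0x12, ncmd 1, opcode 0x043d (OGF 0x01, OCF 0x003d)
p decode_command_status([0x12, 0x01, 0x3d, 0x04])
```

The event only carries a single status code, which is why it cannot say which parameter the controller rejected.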

Another brute-force approach I thought of was to change the kernel so that it sends these parameters itself at the beginning of the eSCO "handshake". There is no way to set this up from userspace, so I changed the following kernel code in this section.

    if (conn->pkt_type & ESCO_2EV3)
        cp.max_latency = cpu_to_le16(0x0008);
    else
        // Before this was 0x000D
        cp.max_latency = cpu_to_le16(0x0008);

    // Before this was 0x02
    cp.retrans_effort = 0x00;
    hci_send_cmd(hdev, HCI_OP_ACCEPT_SYNC_CONN_REQ, sizeof(cp), &cp);

Connecting with the modified kernel didn't work: the Pixel 3 rejected the eSCO negotiation.

    > HCI Event: Synchronous Connect Compl.. (0x2c) plen 17    #2319 [hci0] 29.535444
            Status: Unsupported LMP Parameter Value / Unsupported LL Parameter Value (0x20)
            Handle: 0
            Address: XX:XX:XX:XX:XX:XX (Google, Inc.)
            Link type: eSCO (0x02)
            Transmission interval: 0x00
            Retransmission window: 0x00
            RX packet length: 0
            TX packet length: 0
            Air mode: Transparent (0x03)

The HFP/eSCO connection was live but downgraded to the worse CVSD codec. After that happened, I simply gave up.

I don't know whether the bug is in the closed-source controller, which would require me to buy a physical Bluetooth packet sniffer to investigate, or in the Android Bluetooth stack, which uses Bluedroid instead of BlueZ. It's better to just buy a new 15 euro Bluetooth USB stick. With two controllers, one communicates with the Pixel 3 over HFP/eSCO, and the other takes care of the Bose headphone over A2DP/L2CAP.

Calls through the speaker

Sometimes I use the speakers and not the headphone. However, placing calls with them is a bad idea because of the echo.

Luckily, PipeWire ships with a module called echo-cancel.

It uses the webrtc-audio-processing project, created initially for PulseAudio, which packages the echo cancellation logic of WebRTC from Chromium's codebase as a standalone library.

Because of this project, PipeWire can use the same top-notch echo cancellation algorithm as Chrome.

Including the module is simple. It attaches to the default sink/source, and I didn't find a way to point it to a specific node.

The speaker and the microphone are already the default sink/source, so that's not an issue for now.

Add the following entry to context.modules in /usr/share/pipewire/client.conf.

    context.modules = [
        # Other modules
        # ...
        { name = libpipewire-module-echo-cancel }
    ]

pw-dot image from this setup

Closing remarks

Bluetooth is a powerful and complex technology. The current setup is particularly fragile. Some days I have pairing problems with the devices and need to remove, pair, and trust them repeatedly. Other days the voice is robotic, and I need to fall back to the old wired headset. There are probably ways to troubleshoot these issues, but honestly, I just want to make some Whatsapp calls.

I still can't complain much because Bluetooth allowed me to make this setup work, and I'm glad that the SIG came up with it. But, even today, not having mandatory good audio quality for calls is unacceptable. I also would like to ironically thank Bose for not offering the optional mSBC codec. I almost bought a Sony equivalent instead of this high-end headphone. If I had gone for it, I could simply have connected the smartphone to the headphone without the Linux bridge. In hindsight, maybe it was better this way because the investigation and this post wouldn't exist otherwise.

The real kudos go to the PipeWire maintainers, though. Namely Wim Taymans for creating and maintaining the whole thing, and P V and Bao, Huang-Huang for actively improving Bluetooth support in PipeWire (at least these were the most prominent faces I saw in the issues). Unfairly, I skipped many contributors to PipeWire and BlueZ, but I'm thankful to all of them.

As for the calls, Whatsapp supports native desktop calling, but only on Windows and macOS. Maybe someday they will port it to Linux, which would make the Bluetooth setup obsolete. To add insult to injury, in the meantime I convinced my mother to switch to Signal, which does support desktop calling. Surprisingly, she switched not because of privacy but because the call quality was better.

The destination of receiving Whatsapp calls with Bluetooth and PipeWire was disappointing, but the journey of deep-diving into the Linux kernel, Bluetooth, PipeWire, and eBPF was the real reward.